BioLogica Research

Just as BioLogica embodies phenomena of genetics in a multilevel hypermodel, our research seeks to construct and elaborate a multilevel model of learning with hypermodels in science classrooms. We integrate research findings and methodologies from cognitive psychology, science education, instructional technology and educational psychology to create interventions and assessments that instantiate theoretical concepts. Our research requires the support of technology not only for creating the interventions and assessments, but also for collecting and analyzing the data generated when learners use BioLogica.

Conceptual Framework

The importance of models and modeling in scientific research has been widely documented. Models are used both to describe scientific phenomena and to generate testable hypotheses. Today, models and modeling are considered essential components of scientific literacy. With the importance of models and modeling to science education comes the need for a coherent theory of model-based teaching and learning (MBTL).

We have been working on such a theory during the last decade. Our basic hypothesis is that understanding biological phenomena requires learners to construct, elaborate and revise mental models of the phenomena under study.

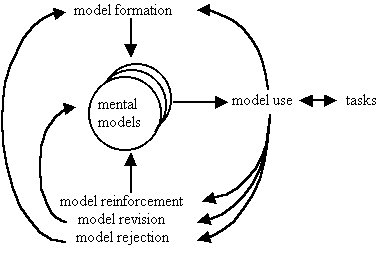

Figure 1: Model-Based Learning Framework

In response to task demands, learners who are engaged in model-based learning construct models from prior knowledge and new information. If the model enables them to perform the task successfully, the model is reinforced. However, if the model does not enable successful performance, the model may be rejected and a new one constructed, or the model may be revised or elaborated prior to another attempt. Such model-based learning results in knowledge that is integrated, usable and extensible in the domain. The model-based learning framework shown in Figure 1 represents the cognitive core of the learner level of our model of classroom learning. In addition, there are learner beliefs that influence the effort a learner chooses to invest in the task and in participation in the classroom culture, which presents not only a variety of tasks but also participation structures affecting a learner's interactions with phenomena, resources, tools, and other learners. These factors, along with commonly held beliefs, constitute the classroom culture and therefore are key elements in the classroom level of our developing multilevel model of learning with hypermodels.

Buckley (1992) documented and contrasted the learning goals, gains, and strategies of two students in a classroom of 28 students who were using an interactive multimedia resource about the circulatory system. Through microanalysis of multiple forms of data, Buckley created a rich description of model-based learning situated in a technology-rich high school biology classroom. The mental models of the learner were described as models embedded in models, forming an anatomical hierarchy. This study grounded the development of an initial model of model-based learning. Key elements in the model are prior knowledge, interpretation of the assigned task, mindfully seeking out and integrating necessary information about parts, processes and mechanisms, and evaluating both the new information and existing models prior to integration. Buckley (2000) extended the initial model to include the role of representations in model-based learning. Using a framework developed by Buckley & Boulter, representations in the interactive multimedia resource were analyzed for their potential contributions to the learner's correct and incorrect conceptions. The interaction between the learner's existing models and the representations was documented from classroom discourse.

Representations, whether discourse, text, diagrams, animations, gestures or linked, multilevel hypermodels, are an essential element of model-based teaching and learning. Representations and the phenomena they re-present are the links between the internal cognitive processes and the external sociocultural processes that enable learning in classrooms. The use of representations is often problematic in that the 'reader' often misses the intent of the 'writer'. However, many studies have examined the effectiveness of different types of representations in supporting learning. Gobert and Clement (1999, NSF# 9150002) studied the differential benefits of student-generated models versus summaries and the benefits of student-generated models versus explanations on rich learning (Gobert, 1997). It was found that rich tasks like modeling and explaining, which require inference-making, lead to deeper understanding of the content area than lower-level tasks such as summarizing. Gobert (2000, NSF# 9980600) studied the causal reasoning associated with models of varying levels of causal integration and the visual/spatial inferences afforded on the basis of different models. We found that reasoning on the basis of models that were spatially correct in terms of the relative placement of the layers inside the earth afforded visual inferences; in contrast, spatially "incorrect" models could not support inference-making until remediated.

Prior Research

Technology enables the creation of visuals and models that simulate the structures and behaviors of phenomena, and it gives learners the opportunity to interact with the elements of the models on a variety of tasks. For example, Horwitz and White (1988) developed the ThinkerTools software that helped sixth graders formulate models involving the action of forces in two dimensions. They did a careful study of the students' misconceptions prior to instruction and administered a post-test designed to probe students' misconceptions. The sixth graders scored significantly higher on the post-test than high school physics students in the same school system.

However, as prior research has demonstrated (Buckley, 1992; Christie, 1999; Horwitz & Christie, 1999), providing learners with representations and models for normally inaccessible phenomena may be a necessary condition to facilitate science learning of this kind, yet it is not a sufficient one. In Buckley's (1992) study, only one student in a class of 28 engaged in model-building, although all had access to a rich interactive multimedia resource about the circulatory system. In Horwitz & Christie's (1999) work with GenScope™, the precursor to BioLogica™, which provided high school science students with an interactive, exploratory, and constructivist genetics hypermodel, few learners demonstrated the hoped-for gains in understanding and reasoning as measured by statistical differences in pre- and post-tests. However, many GenScope™ students demonstrated an ability to correctly solve difficult genetics problems in situ as well as an increased proper use of scientific language to explain their reasoning (Christie, 1997).

Follow-on work documented students' beliefs that these problem-solving experiences were very unlike their prior science class learning experiences. Students went so far as to characterize their GenScope™ learning experiences as "somethin' more than learnin'." In particular, GenScope™ experiences gave students "time to think" and "time to talk" (Christie, 1999). Christie's (1999, 2000, 2001) qualitative research on perceptions of technology-supported science learning experiences suggests that certain technologies challenge students' prior beliefs about the meaning of key educational concepts, such as learning, success, and failure. Moreover, it suggests that students organize their thoughts about academic experiences in concert with particular instructional activities (e.g., reading, writing, talking, or doing) and the "achievement resources" (e.g., books, computers) available or required for participation in such instruction (Christie, 2001; 1999; Hickey, Kindfield, Horwitz & Christie, 2000). Therefore, qualitative methods are critical in understanding technology-supported learning processes and subsequent learning or achievement outcomes (Christie, 2001; 2000; 1997).

We attribute these and other such findings (Christie, 2001) in part to the cultural changes that emerge in a classroom setting as a direct result of the presence of technologies that challenge existing models of teaching and learning. This point is critical and worth repeating: Although new technologies do not necessarily produce statistically significant improvements in learning outcomes, their presence alone can shake up commonly held beliefs about what constitutes teaching, learning, and success or failure in the classroom. We refer to these commonly held beliefs and the behaviors that co-exist with them as "classroom culture."

Research Design

From prior research we know that providing access to hypermodels is not sufficient for learning, but we have some ideas about what is needed to foster learning and transfer when learners use BioLogica. However, we also have many questions about how well these ideas work in real classrooms with real students. The table below lists the research questions we are posing and the data we will use to answer them.